Research

At the Zhan Lab, we build next-generation AI systems that connect language models, clinical reasoning, and biological discovery. Our mission is to enable trustworthy, interpretable, and interactive AI that transforms genomic medicine, precision health, and human-AI collaboration.

We believe foundation models, if properly secured and grounded, can revolutionize science and healthcare. Our research spans rare variant discovery, secure genomics, and autonomous surgical reasoning.

Grants

Supporting Undergraduate Research Experiences (SURE) Award

Funding Agency: New Mexico EPSCoR

Role: Principal Investigator

Co-Principal Investigator: Dr. Ramyaa

Project Title: Large Language Models for Code and Compiler Education: A Course-Based Undergraduate Research Experience

Period: 2025–2026

This project develops a course-based undergraduate research experience (CURE) that integrates large language models into code analysis and compiler education. The goal is to broaden participation in research by embedding authentic, AI-driven research activities directly into the undergraduate curriculum, while training students in modern AI-assisted software and systems education.

NM-INBRE Developmental Research Project Program (DRPP)

Funding Agency: NIH / National Institute of General Medical Sciences (via NM-INBRE)

Role: Principal Investigator

Project Title: REACT: Rehabilitation through Embodied Agentic Clinical Transfer

Period: 2025–2026

This seed-funded project explores agentic AI models for neurorehabilitation, focusing on embodied learning and clinical transfer. The work supports undergraduate and graduate research training at the intersection of artificial intelligence, health, and rehabilitation, aligned with the NM-INBRE mission to expand biomedical research capacity in New Mexico.

Primary Research Areas

- Variant Effect Prediction with Large Language Models

1.1 Disease-Specific Modeling

1.2 Adaptive Reasoning Models for Dynamic Reclassification

- Trustworthy and Secure Genomic AI

2.1 Privacy Leakage in Foundation Models

2.2 Adversarial Robustness

- Unified Generative AI for Surgery and Rehabilitation

3.1 Diffusion-Based Multimodal Surgical Gesture Prediction

3.2 Assistive Robotics for Rehab

1. Variant Effect Prediction with Large Language Models

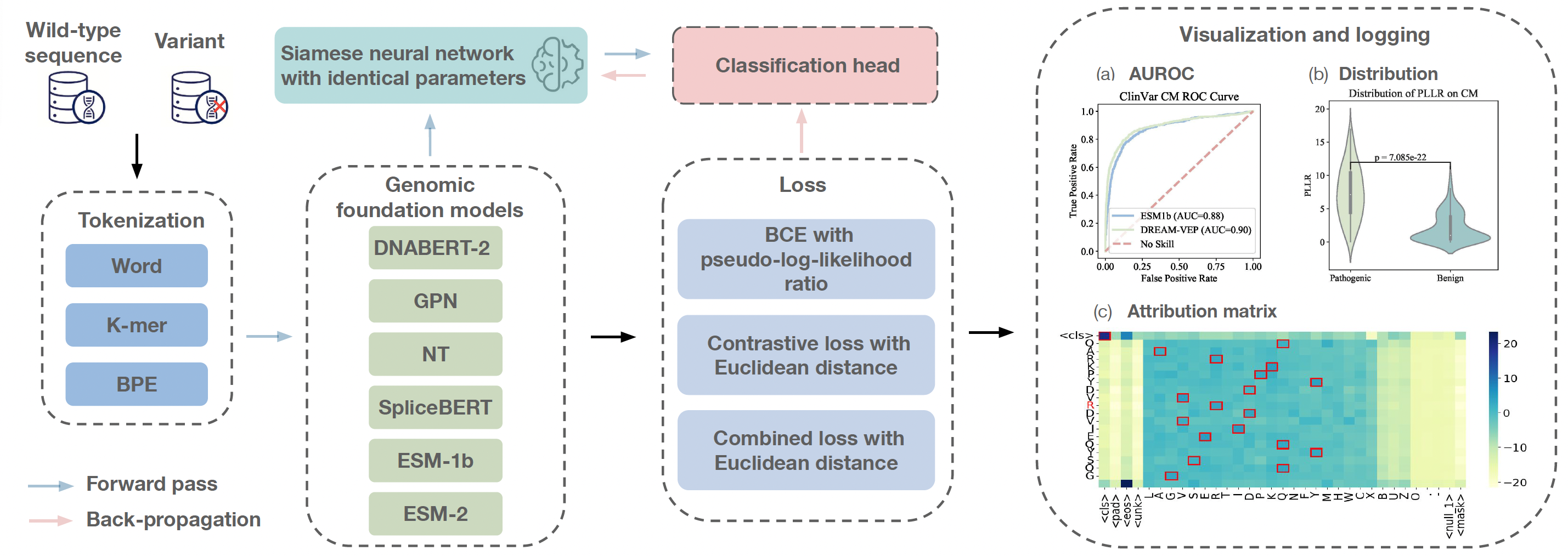

We develop disease-specific language models to predict the functional impact of genetic mutations. Our flagship system, DYNA, enables more precise variant interpretation for cardiac and regulatory genomics.

1.1 Disease-Specific Modeling

DYNA outperforms general-purpose LLMs by focusing on domain-specific signals in gene regulation and disease mechanisms. We work on calibration, zero-shot generalization, and clinically relevant variant filtering.
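As a rough illustration of what calibration means for a pathogenicity score, the sketch below computes an expected calibration error (ECE) for binary predictions. It is a generic textbook-style metric written for this page, not DYNA's evaluation code; the function name, the equal-width binning, and the bin count are assumptions chosen for clarity.

```python
def expected_calibration_error(probs, labels, n_bins=10):
    """Binned gap between predicted probability and observed positive rate.

    probs  -- predicted probabilities of pathogenicity, each in [0, 1]
    labels -- ground-truth labels, 1 for pathogenic and 0 for benign
    n_bins -- number of equal-width probability bins (an assumed default)
    """
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        # Clamp so that p == 1.0 falls into the last bin.
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((p, y))

    total = len(probs)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_prob = sum(p for p, _ in members) / len(members)
        positive_rate = sum(y for _, y in members) / len(members)
        # Weight each bin's gap by the share of predictions it holds.
        ece += (len(members) / total) * abs(mean_prob - positive_rate)
    return ece
```

A value near zero means the predicted probabilities match the observed frequencies, which matters when scores are used to filter variants for clinical review.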

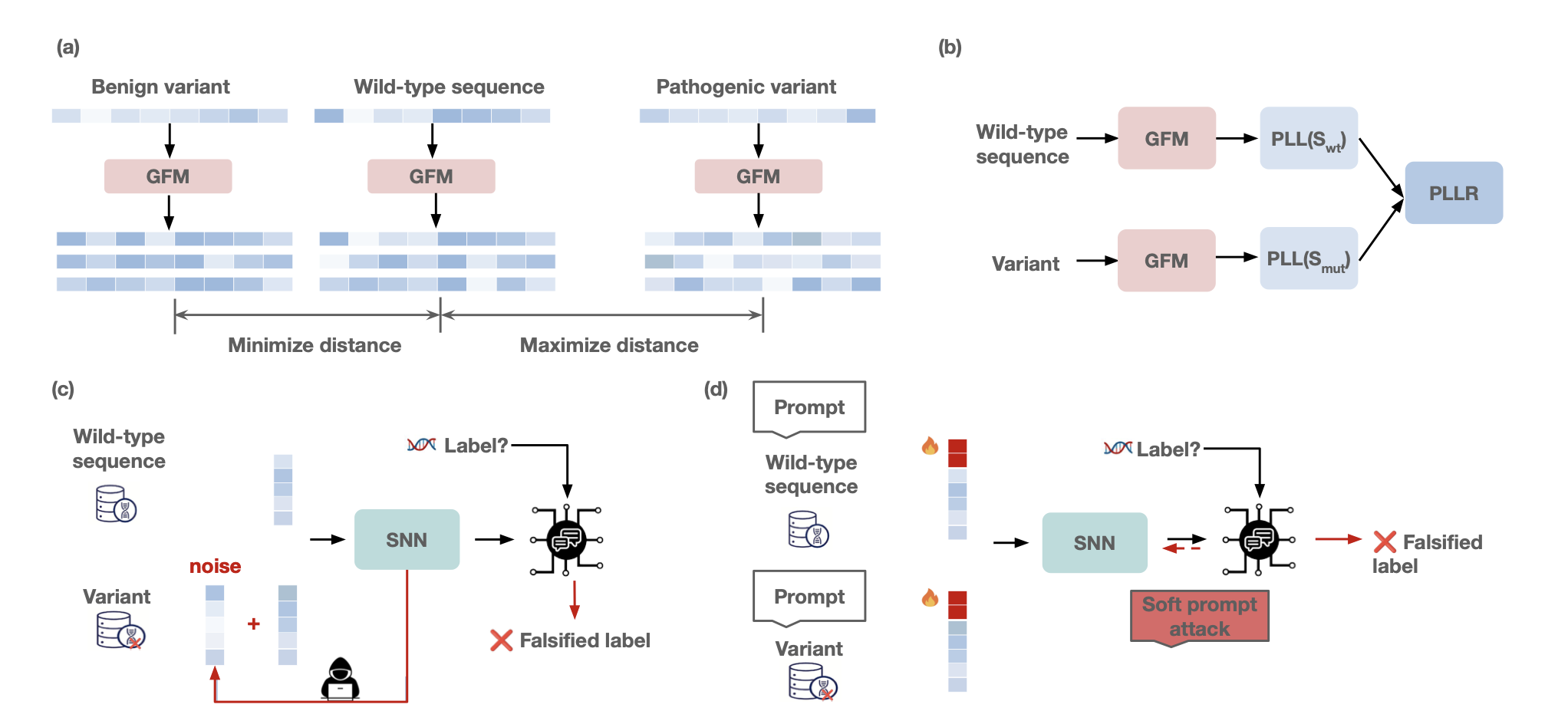

1.2 Adaptive Reasoning Models in Genomics: From DYNA to Dynamic Variant Reclassification

Building on DYNA, our disease-specific language model for variant pathogenicity prediction, we propose a new direction that brings Adaptive Reasoning models to genomic interpretation. This approach recasts variant effect prediction as a dynamic reasoning process in which predictions evolve as new clinical or biological evidence becomes available. This shift toward world modeling enables more flexible, forward-looking interpretation of variants of uncertain significance (VUS), particularly in underrepresented diseases such as cardiovascular disorders. It lays the groundwork for rethinking how uncertainty and evidence accumulation are handled in clinical genomics.

Figure. Illustration of our DYNA-WorldModel framework.

The model treats variant interpretation as an evolving state, enabling re-evaluation as additional information emerges. This paradigm is especially impactful for rare variant prioritization in contexts with limited labeled data.
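The idea of an evolving interpretation state can be illustrated with a deliberately simple stand-in: a Bayesian log-odds update in which each new piece of evidence shifts the probability of pathogenicity. This is a minimal sketch, not the DYNA-WorldModel framework itself; the function names, the likelihood ratios, and the 0.1 / 0.9 classification thresholds are all hypothetical.

```python
import math


def posterior_trajectory(prior, likelihood_ratios):
    """Return the probability of pathogenicity after each piece of evidence.

    prior             -- initial probability in (0, 1), e.g. a model score
    likelihood_ratios -- one ratio per evidence item; values above 1 favor
                         pathogenic, values below 1 favor benign
    """
    log_odds = math.log(prior / (1.0 - prior))
    trajectory = []
    for ratio in likelihood_ratios:
        log_odds += math.log(ratio)  # Bayes' rule in log-odds form
        trajectory.append(1.0 / (1.0 + math.exp(-log_odds)))
    return trajectory


def classify(probability, benign_below=0.1, pathogenic_above=0.9):
    """Map a probability to a coarse label (thresholds are illustrative)."""
    if probability >= pathogenic_above:
        return "likely pathogenic"
    if probability <= benign_below:
        return "likely benign"
    return "VUS"


if __name__ == "__main__":
    # A variant starts as uncertain and is re-evaluated as evidence arrives.
    for p in posterior_trajectory(0.5, [4.0, 4.0, 0.25]):
        print(f"{p:.3f} -> {classify(p)}")
```

In this toy run the variant moves from VUS to likely pathogenic after two supporting observations and returns to VUS when conflicting evidence arrives, which is the kind of reclassification over time the paragraph above describes.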

Related publication:

2. Privacy and Security in Genomic AI

Genomic foundation models are powerful—but vulnerable. We study privacy risks and build robust learning frameworks to make genomic AI secure, reproducible, and fair.

2.1 Privacy Leakage in Foundation Models

Our recent work revealed privacy leakage in models like DNABERT-2. Using mutational probes, we demonstrated that even foundation models trained without labels can memorize sensitive genomic sequences. We introduce defense strategies such as mutational masking.

2.2 Adversarial Robustness

We test how robust genomic LLMs are under adversarial perturbations, such as biologically plausible point mutations, and develop robust training techniques so that variant prediction models remain stable in real-world deployment.
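A point-mutation sensitivity check of this kind can be sketched as follows. The example enumerates every single-nucleotide substitution of a sequence and reports the one that moves a model's score the most. It is only an illustration under stated assumptions: exhaustive enumeration stands in for a biologically informed choice of mutations, and `gc_fraction` is a toy scorer used in place of a real genomic model.

```python
from typing import Callable, Iterator, Tuple

BASES = "ACGT"


def single_nucleotide_variants(seq: str) -> Iterator[Tuple[int, str, str]]:
    """Yield (position, new_base, mutated_sequence) for each substitution."""
    for i, ref in enumerate(seq):
        for alt in BASES:
            if alt != ref:
                yield i, alt, seq[:i] + alt + seq[i + 1:]


def max_score_shift(seq: str, score: Callable[[str], float]) -> Tuple[int, str, float]:
    """Find the single substitution that changes the score the most.

    Returns (position, new_base, absolute_score_change). Ties go to the
    first variant encountered.
    """
    baseline = score(seq)
    best = (-1, "", -1.0)
    for pos, alt, mutated in single_nucleotide_variants(seq):
        shift = abs(score(mutated) - baseline)
        if shift > best[2]:
            best = (pos, alt, shift)
    return best


def gc_fraction(seq: str) -> float:
    """Toy scorer (hypothetical stand-in for a model): fraction of G/C bases."""
    return sum(base in "GC" for base in seq) / len(seq)


if __name__ == "__main__":
    print(max_score_shift("ACGT", gc_fraction))
```

A model whose score shifts sharply under one such substitution at a position with no known functional role would be a candidate for the robust training described above.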

Figure. Illustration of adversarial sensitivity in GFMs.

Related publication:

3. Unified Generative AI for Surgery and Rehabilitation

To build truly trustworthy agentic AI for clinical settings, we must go beyond performance and address privacy, generalizability, and robustness in embodied and interactive medical AI systems. Our lab explores how to integrate language models and sensor data while safeguarding patient confidentiality and minimizing information leakage.

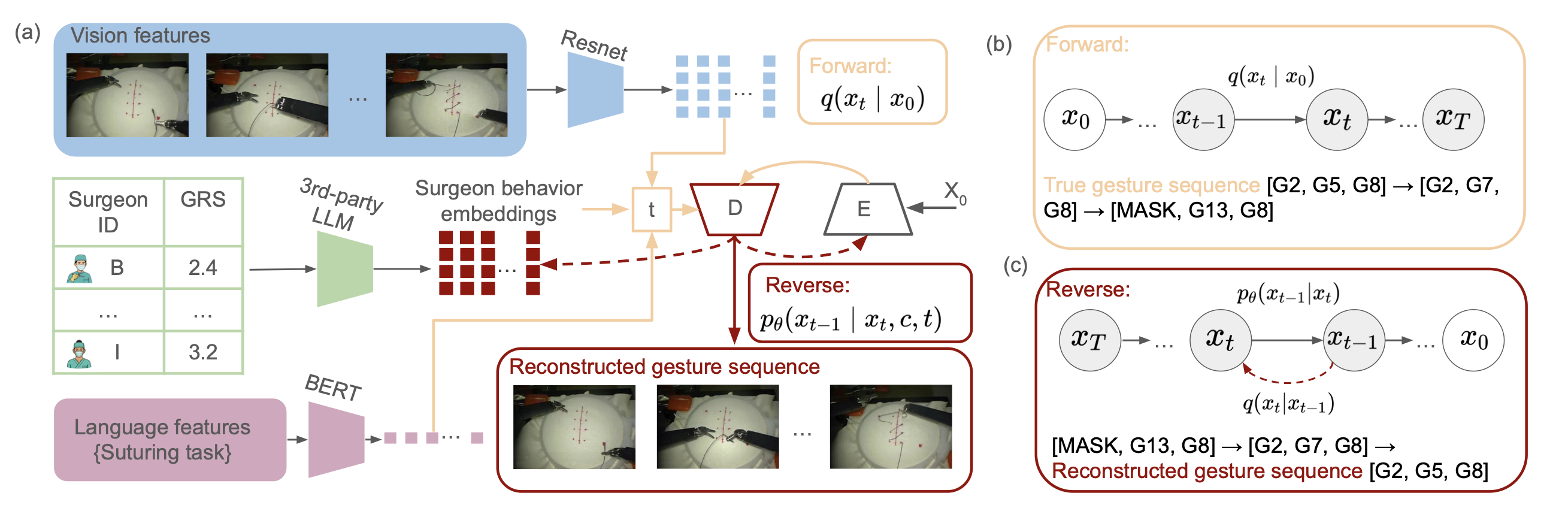

3.1 Diffusion-Based Multimodal Surgical Gesture Prediction

Surgical AI must reason over real-time data—often from cameras, wearables, and robotic devices—yet these streams can contain sensitive information. We study how to predict the next surgical gesture or subphase using privacy-aware multimodal modeling, ensuring data minimization and feature obfuscation without degrading performance. Our goal is to enable skill assessment, decision support, and AI-guided training while preserving surgeon and patient privacy.

3.2 Assistive Robotics for Rehab

We collaborate with clinicians and engineers to embed AI into rehab robotics. We use wearable sensor data and fine-grained action modeling to personalize rehabilitation plans for stroke and spinal injury patients.

Figure. Personalized gesture sequence prediction using diffusion models.

Related publication: